Section: New Results

Interaction and Design for Virtual Environments

Diffusion Curves: A Vector Representation for Smooth-Shaded Images

Participant : Adrien Bousseau.

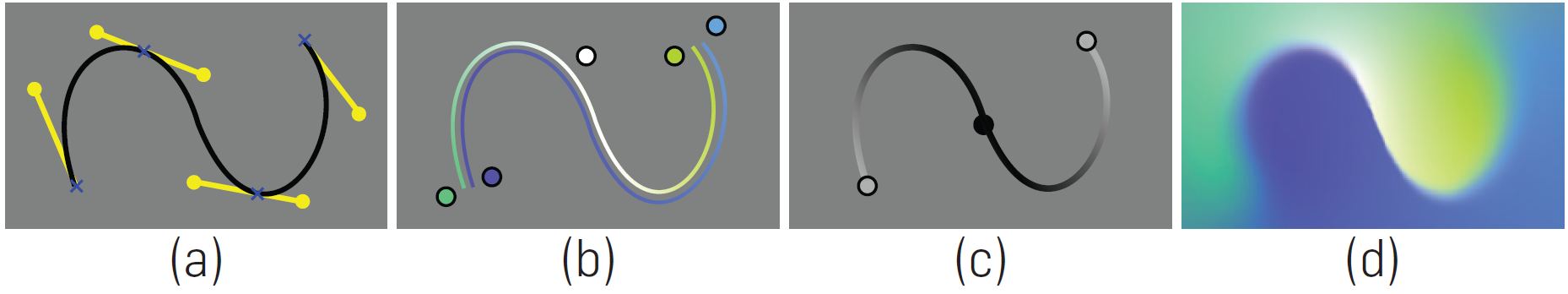

This paper was selected for presentation in Communications of the ACM as an important graphics research result of interest to the entire Computer Science community. We describe a new vector-based primitive for creating smooth-shaded images, called the diffusion curve. A diffusion curve partitions the space through which it is drawn, defining different colors on either side. These colors may vary smoothly along the curve. In addition, the sharpness of the color transition from one side of the curve to the other can be controlled. Given a set of diffusion curves, the final image is constructed by solving a Poisson equation whose constraints are specified by the set of gradients across all diffusion curves (Figure 7). Like all vector-based primitives, diffusion curves conveniently support a variety of operations, including geometry-based editing, keyframe animation, and ready stylization. Moreover, their representation is compact and inherently resolution independent. We describe a GPU-based implementation for rendering images defined by a set of diffusion curves in real time. We then demonstrate an interactive drawing system allowing artists to create artwork using diffusion curves, either by drawing the curves in a freehand style, or by tracing existing imagery. Furthermore, we describe a completely automatic conversion process for taking an image and turning it into a set of diffusion curves that closely approximate the original image content.
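To give an intuition of how colors defined along curves spread to the whole image, the sketch below shows a simplified, CPU-only diffusion step. It assumes that the colors on either side of each curve have already been rasterized into a set of fixed pixels (Dirichlet constraints), and relaxes every other pixel toward the average of its neighbours with Jacobi iterations, i.e. it solves the Laplace equation. This is not the GPU solver described above: it omits the gradient (divergence) term of the Poisson equation and the blur pass that controls transition sharpness, and the function name `diffuse` is hypothetical.

```python
def diffuse(width, height, constraints, iterations=500):
    """Harmonic interpolation of sparse color constraints on a pixel grid.

    constraints: dict mapping (x, y) -> (r, g, b). These pixels stay fixed
    (e.g. pixels on either side of a diffusion curve); all other pixels relax
    toward the mean of their in-image 4-neighbours (Jacobi iteration).
    """
    # Free pixels start black; constrained pixels start at their color.
    img = [[constraints.get((x, y), (0.0, 0.0, 0.0)) for x in range(width)]
           for y in range(height)]
    for _ in range(iterations):
        new = [row[:] for row in img]
        for y in range(height):
            for x in range(width):
                if (x, y) in constraints:
                    continue  # constraint pixels never change
                # Only neighbours inside the image: zero-flux image border.
                nbrs = [img[ny][nx] for nx, ny in
                        ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1))
                        if 0 <= nx < width and 0 <= ny < height]
                new[y][x] = tuple(sum(c[i] for c in nbrs) / len(nbrs)
                                  for i in range(3))
        img = new
    return img
```

For example, `diffuse(5, 1, {(0, 0): (1.0, 0.0, 0.0), (4, 0): (0.0, 0.0, 1.0)}, iterations=2000)` converges to a linear red-to-blue ramp. Jacobi iteration converges slowly on large images, which is one reason a practical renderer would use a multigrid or GPU solver instead.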


This work is a collaboration with Alexandrina Orzan, Pascal Barla (Inria / Manao), Holger Winnemöller (Adobe Systems), Joëlle Thollot (Inria / Maverick) and David Salesin (Adobe Systems). This work was originally published in ACM Transactions on Graphics (Proceedings of SIGGRAPH 2008) and was selected for publication in the July 2013 issue of Communications of the ACM [15].

Natural Gesture-based Interaction for Complex Tasks in an Immersive Cube

Participants : Emmanuelle Chapoulie, George Drettakis.

We present a solution for natural gesture interaction in an immersive cube in which users can manipulate objects with the fingers of both hands in a close-to-natural manner for moderately complex, general-purpose tasks. Our solution uses finger tracking coupled with a real-time physics engine, combined with a comprehensive approach for hand gestures that is robust to tracker noise and simulation instabilities. To determine whether our natural gestures are a feasible interface in an immersive cube, we perform an exploratory study for tasks involving the user walking in the cube while performing complex manipulations such as balancing objects. We compare gestures to a traditional 6-DOF Wand, and we also compare both gestures and Wand with the same task, faithfully reproduced in the real world. Users are also asked to perform a free task, allowing us to observe their perceived level of presence in the scene. Our results show that our robust approach provides a feasible natural gesture interface for immersive cube-like environments and is perceived by users as closer to the real experience than the Wand.
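One common way to make finger-based grasping robust to tracker noise is to smooth the tracked signal and to use two thresholds (hysteresis) so that jitter around a single threshold cannot rapidly toggle a grasp on and off. The sketch below illustrates that general idea on the thumb-index distance; it is not the gesture approach described above, and the class name, the smoothing factor `alpha` and the thresholds `close_mm`/`open_mm` are hypothetical values chosen for illustration.

```python
import math


class GraspDetector:
    """Hypothetical pinch detector: exponential smoothing plus hysteresis."""

    def __init__(self, alpha=0.3, close_mm=20.0, open_mm=35.0):
        self.alpha = alpha          # smoothing factor in (0, 1]; 1 = no smoothing
        self.close_mm = close_mm    # grasp starts when distance drops below this
        self.open_mm = open_mm      # grasp ends when distance rises above this
        self.smoothed = None
        self.grasping = False

    def update(self, thumb, index):
        """thumb, index: (x, y, z) fingertip positions in millimetres."""
        dist = math.dist(thumb, index)  # Python 3.8+
        if self.smoothed is None:
            self.smoothed = dist
        else:
            # Exponential moving average damps high-frequency tracker noise.
            self.smoothed += self.alpha * (dist - self.smoothed)
        # Two thresholds: noise between them cannot flip the current state.
        if not self.grasping and self.smoothed < self.close_mm:
            self.grasping = True
        elif self.grasping and self.smoothed > self.open_mm:
            self.grasping = False
        return self.grasping
```

With `alpha=1.0` (no smoothing), fingertips 10 mm apart start a grasp, a jittery reading at 30 mm keeps it, and only a separation above 35 mm releases it.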

This work is a collaboration with Jean-Christophe Lombardo of SED, with Evanthia Dimara and Maria Roussou from the University of Athens and with Maud Marchal from IRISA-INSA/Inria Rennes - Bretagne Atlantique. The work is under review in the journal Virtual Reality.

Evaluation of Direct Manipulation in an Immersive Cube: a Controlled Study

Participants : Emmanuelle Chapoulie, George Drettakis.

We are pursuing a study of interaction using finger tracking and traditional six-degree-of-freedom (6-DOF) flysticks in a virtual reality immersive cube. Our study aims to identify which factors make one interface better than the other and what tradeoffs arise in designing such experiments; to this end, we decompose the movements into tasks with a restricted number of DOF.
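Restricting DOF in such a study can be as simple as discarding the components of each frame's pose update that a given experimental condition does not allow. The minimal sketch below assumes the update is expressed as three translation and three rotation increments (treating small rotation increments as independent axes is a simplification); the function name and the component names are hypothetical.

```python
def restrict_dof(delta, allowed):
    """Zero out the components of a 6-DOF pose increment that are not allowed.

    delta:   (tx, ty, tz, rx, ry, rz) translation and rotation increments
    allowed: set of component names, e.g. {"tx", "ty"} for planar translation
    """
    names = ("tx", "ty", "tz", "rx", "ry", "rz")
    unknown = set(allowed) - set(names)
    if unknown:
        raise ValueError(f"unknown DOF: {sorted(unknown)}")
    # Keep allowed components unchanged, force the others to zero.
    return tuple(v if n in allowed else 0.0 for n, v in zip(names, delta))
```

For instance, a condition allowing only translation along x and rotation about z would call `restrict_dof(delta, {"tx", "rz"})` before applying the update to the manipulated object.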

The Drawing Assistant: Automated Drawing Guidance and Feedback from Photographs

Participants : Emmanuel Iarussi, Adrien Bousseau.


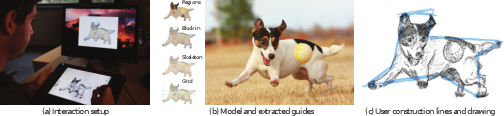

Drawing is the earliest form of visual depiction and continues to enjoy great popularity with paint systems. However, drawing requires artistic skills that many people feel are out of their reach. We developed an interactive drawing tool that provides automated guidance over model photographs to help people practice traditional drawing-by-observation techniques. The drawing literature describes a number of techniques to help people become aware of the shapes in a scene and their relationships. We compile these techniques and derive a set of construction lines that we automatically extract from a model photograph (see Figure 8). We then display these lines over the model to guide its manual reproduction by the user on the drawing canvas. Our pen-based interface also allows users to navigate between the techniques they wish to practice and to draw construction lines in dedicated layers. We use shape matching to register the user’s sketch with the model guides, and we use this registration to provide corrective feedback to the user. We conducted two user studies, with a total of 20 users, to inform the design of our tool and to evaluate our approach. Participants produced better drawings using the drawing assistant, with more accurate proportions and alignments. They also perceived that guidance and corrective feedback helped them better understand how to draw. Finally, some participants spontaneously applied the techniques when asked to draw without our tool after using it for about 30 minutes.
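Registration followed by corrective feedback can be illustrated with a standard least-squares similarity fit (2D Procrustes). The sketch below assumes that point correspondences between the user's sketch and the model guides are already known, which sidesteps the shape-matching step itself; it is a swapped-in textbook technique, not the tool's registration method, and the function names are hypothetical. Points are encoded as complex numbers so that rotation plus uniform scale is a single complex factor `a`.

```python
def fit_similarity(src, dst):
    """Least-squares 2D similarity (rotation, uniform scale, translation).

    src, dst: equal-length lists of (x, y) with src[i] corresponding to dst[i].
    Returns complex (a, b) such that dst ~= a * z + b for z = complex(x, y).
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two corresponding points")
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    pm = sum(p) / len(p)  # centroid of the sketch points
    qm = sum(q) / len(q)  # centroid of the guide points
    num = sum((pi - pm).conjugate() * (qi - qm) for pi, qi in zip(p, q))
    den = sum(abs(pi - pm) ** 2 for pi in p)
    if den == 0:
        raise ValueError("source points are all identical")
    a = num / den
    b = qm - a * pm
    return a, b


def residuals(a, b, src, dst):
    """Per-point distance after alignment: large values flag inaccurate strokes."""
    return [abs(a * complex(*s) + b - complex(*d)) for s, d in zip(src, dst)]
```

After alignment, large residuals indicate points where the drawing deviates from the model, which is the kind of signal a corrective-feedback step could highlight.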

This work is a collaboration with Theophanis Tsandilas from the InSitu project team (Inria Saclay), in the context of the ANR DRAO project. It was published in the proceedings of UIST 2013, the 26th Annual ACM Symposium on User Interface Software and Technology [19].

Shape-Aware Sketch Editing with Covariant-Minimizing Cross Fields

Participants : Emmanuel Iarussi, Adrien Bousseau.

Freehand sketches are extensively used in product design for their ability to convey 3D surfaces with a handful of pen strokes. Skillful artists capture all surface information by strategically positioning strokes so that they depict the feature lines and curvature directions of surface patches. Viewers envision the intended 3D surface by mentally interpolating these lines to form a dense network representative of the curvature of the shape. Our goal is to mimic this interpolation process to estimate, at each pixel of a sketch, the projection of the two principal directions of the surface, or their extrapolation over umbilic regions. While the information we recover is purely 2D, it provides a vivid sense of the intended 3D surface and enables various shape-aware sketch editing applications, including normal estimation for shading, cross-hatching rendering and surface parameterization for texture mapping.
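A cross (two directions) is unchanged by a quarter turn, so it cannot be interpolated by simply averaging angles. A classic workaround for orthogonal crosses is to encode a cross of angle θ as the unit vector (cos 4θ, sin 4θ), interpolate those vectors, and decode with θ = atan2(s, c)/4. The sketch below applies this idea along a 1D strip of samples for brevity. It is a simplified stand-in: it only handles orthogonal crosses and plain averaging, whereas the covariant-minimizing cross fields described above handle non-orthogonal crosses with a different energy. The function name is hypothetical.

```python
import math


def interpolate_cross_field(n, constraints, iterations=2000):
    """Interpolate an orthogonal cross field along a 1D strip of n samples.

    constraints: dict index -> angle (radians) of one branch of the cross,
    e.g. the local stroke direction. Unconstrained samples are filled in.
    """
    # Encode each constrained cross as (cos 4θ, sin 4θ): invariant to θ + π/2.
    enc = {i: (math.cos(4 * t), math.sin(4 * t)) for i, t in constraints.items()}
    field = [enc.get(i, (0.0, 0.0)) for i in range(n)]
    for _ in range(iterations):
        new = field[:]
        for i in range(n):
            if i in enc:
                continue  # keep stroke constraints fixed
            nbrs = [field[j] for j in (i - 1, i + 1) if 0 <= j < n]
            new[i] = (sum(c for c, _ in nbrs) / len(nbrs),
                      sum(s for _, s in nbrs) / len(nbrs))
        field = new
    # Decode: the representative branch angle lies in (-pi/4, pi/4].
    return [math.atan2(s, c) / 4 for c, s in field]
```

Halfway between a cross at 0 and a cross at π/8, this sketch returns π/16; a cross at π/2 is treated as identical to one at 0, as the encoding intends.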

This work is a collaboration with David Bommes from the Titane project team, Sophia-Antipolis.

Depicting Stylized Materials with Vector Shade Trees

Participants : Jorge Lopez-Moreno, Stefan Popov, Adrien Bousseau, George Drettakis.

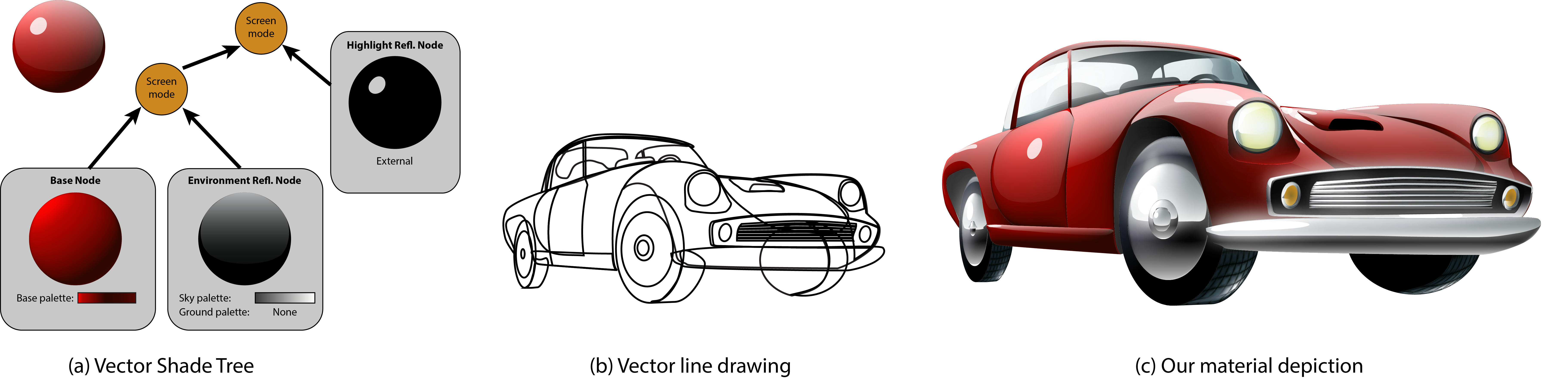

Vector graphics represent images with compact, editable and scalable primitives. Skillful vector artists employ these primitives to produce vivid depictions of material appearance and lighting. However, such stylized imagery often requires building complex multi-layered combinations of colored fills and gradient meshes. We facilitate this task by introducing vector shade trees that bring to vector graphics the flexibility of modular shading representations familiar from 3D rendering. In contrast to traditional shade trees that combine pixel and vertex shaders, our shade nodes encapsulate the creation and blending of vector primitives that vector artists routinely use. We propose a set of basic shade nodes that we design to respect the traditional guidelines on material depiction described in drawing books and tutorials. We integrate our representation as an Adobe Illustrator plug-in that allows even inexperienced users to take a line drawing and obtain a fully colored illustration in a few clicks. More experienced artists can easily refine the illustration, adding more details and visual features, while using all the vector drawing tools they are already familiar with. We demonstrate the power of our representation by quickly generating illustrations of complex objects and materials.

Figure 9 illustrates how our algorithm works. We use a combination of basic shade nodes composed of vector graphics primitives to describe Vector Shade Trees that represent stylized materials (a). Combining these nodes allows the depiction of a variety of materials while preserving traditional vector drawing style and practice. We integrate our vector shade trees in a vector drawing tool that allows users to apply stylized shading effects on vector line drawings (b,c).
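The tree structure can be pictured as follows: leaf nodes emit vector primitives (fills, gradients) for a region of the line drawing, and inner nodes stack their children's output bottom to top, the way an artist stacks layers. The sketch below is a hypothetical, minimal data structure written only to illustrate that composition; the class names, the `"fill"`/`"linear_gradient"` vocabulary and the example material are assumptions, not the plug-in's actual node set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One vector primitive applied to a region of the line drawing."""
    region: str          # id of a closed region in the line drawing (assumed)
    kind: str            # "fill" or "linear_gradient" (hypothetical vocabulary)
    colors: tuple        # one RGB color for fills, two for gradients
    opacity: float = 1.0


class ShadeNode:
    """Base class: a node turns a region into a bottom-to-top list of layers."""
    def layers(self, region):
        raise NotImplementedError


class Fill(ShadeNode):
    def __init__(self, color, opacity=1.0):
        self.color, self.opacity = color, opacity

    def layers(self, region):
        return [Layer(region, "fill", (self.color,), self.opacity)]


class Gradient(ShadeNode):
    def __init__(self, start, end, opacity=1.0):
        self.start, self.end, self.opacity = start, end, opacity

    def layers(self, region):
        return [Layer(region, "linear_gradient", (self.start, self.end), self.opacity)]


class Stack(ShadeNode):
    """Inner node: children are drawn bottom to top, like artist layers."""
    def __init__(self, *children):
        self.children = children

    def layers(self, region):
        out = []
        for child in self.children:
            out.extend(child.layers(region))
        return out


# Usage: a hypothetical glossy red material built from nested nodes.
glossy_red = Stack(
    Fill((0.8, 0.1, 0.1)),                                         # base color
    Stack(
        Gradient((0.2, 0.0, 0.0), (0.8, 0.1, 0.1), opacity=0.6),  # core shadow
        Gradient((1.0, 1.0, 1.0), (0.8, 0.1, 0.1), opacity=0.4),  # soft highlight
    ),
)
mug_layers = glossy_red.layers("mug_body")
```

Because every node returns ordinary vector primitives, the result stays editable with standard vector tools, which mirrors the design goal stated above.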

This work is a collaboration with Maneesh Agrawala from the University of California, Berkeley, in the context of the CRISP Associated Team. The work was accepted as a SIGGRAPH 2013 paper and published in ACM Transactions on Graphics, volume 32, issue 4 [14].

Auditory-Visual Aversive Stimuli Modulate the Conscious Experience of Fear

Participants : Rachid Guerchouche, George Drettakis.

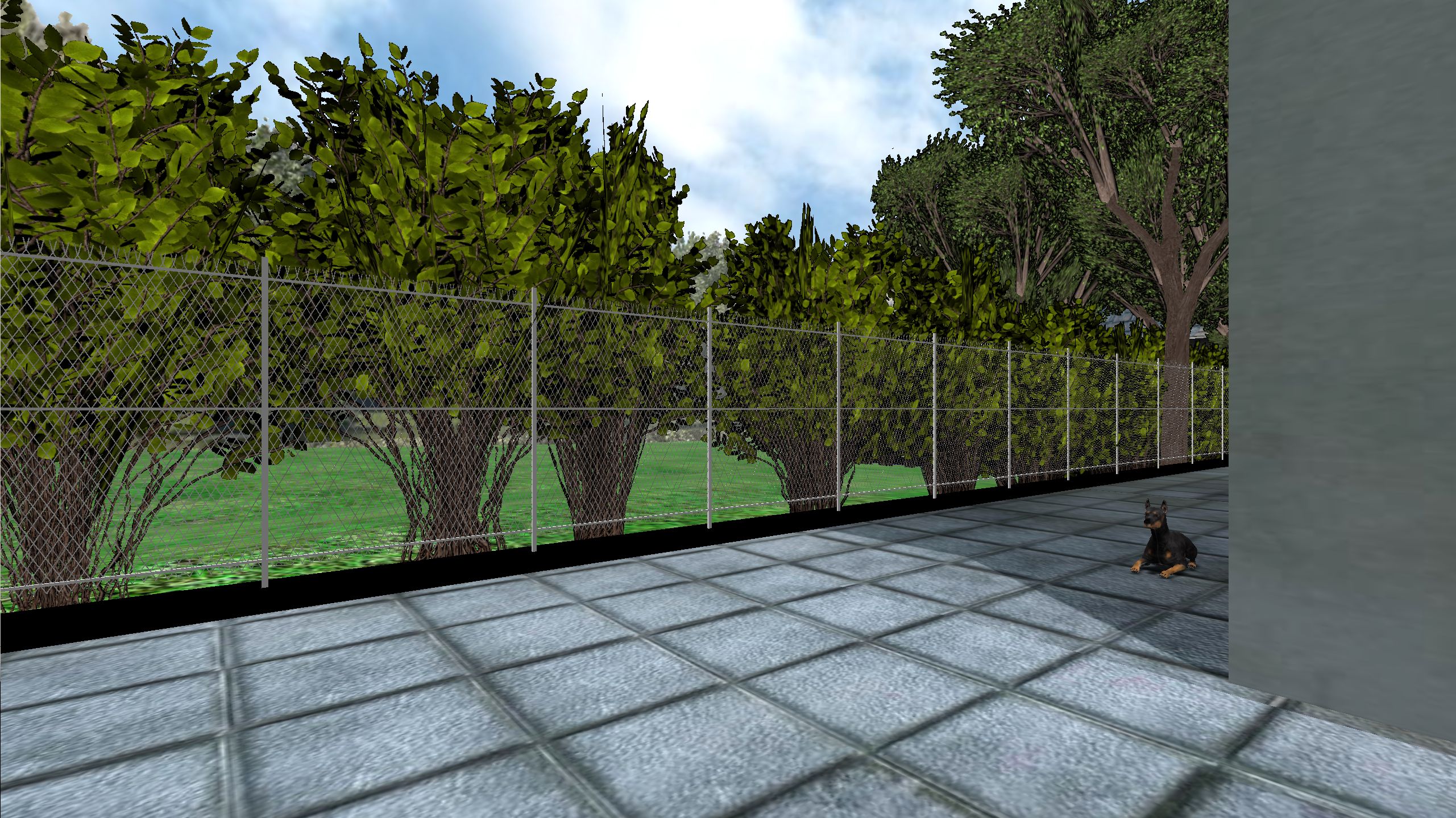

In a natural environment, affective information is perceived via multiple senses, mostly audition and vision. However, the impact of multisensory information on affect remains relatively unexplored. In this study, we investigated whether the auditory-visual presentation of aversive stimuli influences the experience of fear. We took advantage of virtual reality to manipulate multisensory presentation and to display potentially fearful dog stimuli embedded in a natural context. We manipulated the affective reactions evoked by the dog stimuli by recruiting two groups of participants: dog-fearful and non-fearful participants. The sensitivity to dog fear was assessed psychometrically by a questionnaire and also at behavioral and subjective levels using a Behavioral Avoidance Test (BAT). Participants navigated in virtual environments, in which they encountered virtual dog stimuli presented through the auditory channel, the visual channel or both. They were asked to report their fear using Subjective Units of Distress. We compared the fear for unimodal (visual or auditory) and bimodal (auditory-visual) dog stimuli. Dog-fearful participants as well as non-fearful participants reported more fear in response to bimodal audiovisual presentation of dog stimuli than to unimodal presentation. These results suggest that fear is more intense when the affective information is processed via multiple sensory pathways, which might be due to a cross-modal potentiation. Our findings have implications for the field of virtual reality-based therapy of phobias. Therapies could be refined and improved by exploiting and manipulating the multisensory presentation of the feared situations.


This work is a collaboration with Marine Taffou and Isabelle Viaud-Delmon from CNRS-IRCAM, in the context of the European project VERVE. The work was published in the journal Multisensory Research in 2013 [17].

Memory Motivation Virtual Experience

Participants : Emmanuelle Chapoulie, Rachid Guerchouche, George Drettakis.

Memory complaints are known to be one of the first stages of Alzheimer's disease, for which, to date, there is no known pharmacological treatment. In the context of the European project VERVE, and in collaboration with the Resources and Research Memory Centre of Nice Hospital (CM2R), we performed a study on the feasibility of treating memory complaints using realistic immersive virtual environments. Such environments are created using the Image-Based Rendering (IBR) techniques developed by REVES, which make it easy to provide realistic 3D environments of places familiar to the participants from only a few photographs. This allows us to investigate whether IBR virtual environments can convey familiarity.

This work is a collaboration with Pierre-David Petit and Prof. Philippe Robert from CM2R. The work will be presented at the IEEE Virtual Reality 2014 conference and published in its proceedings.

Layered Image Vectorization

Participants : Christian Richardt, Adrien Bousseau, George Drettakis.

Vector graphics enjoy great popularity among graphic designers for their compactness, scalability and editability. The goal of vectorization algorithms is to facilitate the creation of vector graphics by converting bitmap images into vector primitives. However, while a vectorization algorithm should faithfully reproduce the appearance of a bitmap image, it should also generate vector primitives that are easily editable, a goal that existing methods have largely overlooked. We investigate layered representations, which are more compact and editable and hence better preserve the strengths of vector graphics. This work is in collaboration with Maneesh Agrawala in the context of the CRISP Associated Team and Jorge Lopez-Moreno, now a postdoc at the University of Madrid.
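In a layered representation, each pixel of the bitmap is reproduced by compositing a stack of possibly semi-transparent layers, so a layered vectorizer must find layers whose composite matches the input. The sketch below shows only that forward model for a single pixel, using the standard Porter-Duff "over" operator on straight (non-premultiplied) RGBA values; it is an illustration of layering in general, not the vectorization method under investigation, and the function names are hypothetical.

```python
def over(top, bottom):
    """Porter-Duff 'over' for straight (non-premultiplied) RGBA in [0, 1]."""
    rt, gt, bt, at = top
    rb, gb, bb, ab = bottom
    a = at + ab * (1 - at)              # resulting coverage
    if a == 0:
        return (0.0, 0.0, 0.0, 0.0)     # fully transparent result

    def mix(ct, cb):
        # Weight each color by its contribution, then un-premultiply.
        return (ct * at + cb * ab * (1 - at)) / a

    return (mix(rt, rb), mix(gt, gb), mix(bt, bb), a)


def composite(layers):
    """Composite a bottom-to-top list of RGBA colors covering one pixel."""
    result = (0.0, 0.0, 0.0, 0.0)
    for layer in layers:
        result = over(layer, result)
    return result
```

For example, a half-transparent blue layer over an opaque red one yields `(0.5, 0.0, 0.5, 1.0)`; editing a single layer's color then changes every pixel it covers, which is what makes layered results easy to edit.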

True2Form: Automatic 3D Concept Modeling from Design Sketches

Participant : Adrien Bousseau.

We developed a method to estimate smooth 3D shapes from design sketches. We do this by hypothesizing and perceptually validating a set of local geometric relationships between the curves in sketches. We then algorithmically reconstruct 3D curves from a single sketch by detecting their local geometric relationships and reconciling them globally across the 3D curve network.
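Before such relationships can be reconciled in 3D, candidate relationships must first be hypothesized from the 2D drawing. Taking parallelism as an assumed example of one such local relationship, the sketch below lists pairs of 2D curve tangents whose directions agree within a tolerance. It illustrates only this candidate-detection idea, not the reconstruction method described above; the function name and the 10° default tolerance are hypothetical.

```python
import math


def parallel_candidates(tangents, tol_deg=10.0):
    """Return index pairs of 2D tangent directions that are nearly parallel.

    tangents: list of (dx, dy) directions, e.g. sampled where curves meet.
    Directions are treated as undirected lines, so (1, 0) and (-1, 0) match.
    """
    # Map each direction to an undirected angle in [0, pi).
    angles = [math.atan2(dy, dx) % math.pi for dx, dy in tangents]
    tol = math.radians(tol_deg)
    pairs = []
    for i in range(len(angles)):
        for j in range(i + 1, len(angles)):
            d = abs(angles[i] - angles[j])
            d = min(d, math.pi - d)  # wrap around: 179 deg and 1 deg differ by 2 deg
            if d <= tol:
                pairs.append((i, j))
    return pairs
```

A global step would then have to decide which of these candidates hold in 3D, since two curves that look parallel in a single view need not be parallel in space.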

This work is a collaboration with James McCrae and Karan Singh from the University of Toronto and Xu Baoxuan, Will Chang and Alla Sheffer from the University of British Columbia.